Ah, how wonderfully easy Microsoft makes it to interoperate with “legacy” COM components from managed code (.Net). So easy in fact that one might completely overlook the fact that an apparently managed API is actually just a thin shim over a COM API. Unfortunately, one cannot safely ignore the COM-ness of these APIs; some idiosyncrasies “bleed” into your code, particularly those related to memory allocation and lifetime management. The Visual Studio Tools for Office (VSTO) add-in APIs are a good example of this.

Earlier this year I wrote a fairly simple status reporting application using InfoPath, SharePoint and Excel. Users fill out weekly InfoPath status report forms, which they then submit to SharePoint. A manager can then open one of the InfoPath forms from the Windows Shell (using SharePoint’s WebDAV capabilities) and use InfoPath’s built-in “Export to Excel” capabilities to do a bulk import of the status report XML data into an Excel workbook. I wrote approximately 800 lines of VBA (yes, I did just say “VBA”) code to sort, filter, format and analyze the imported Excel data so that a manager can visually identify areas of risk.

VBA is not the most modern or elegant language, but the tools are mature and it gets the job done for simple applications. Here is an example of a VBA function that modifies the color of the “Priority” field of a Activity record:

Sub ColorPriority(SheetName As String)

'Critical: Red

'High: Orange

Worksheets(SheetName).Select

nCol = Col("Priority")

For nRow = 2 To ActiveSheet.UsedRange.Rows.Count

Select Case Cells(nRow, nCol).Value

Case "Critical":

Cells(nRow, nCol).Interior.Color = RGB(255, 0, 0)

Cells(nRow, nCol).AddComment ("Priority is Critical. Typically this indicates a status item that needs follow up.")

Case "High":

Cells(nRow, nCol).Interior.Color = RGB(255, 102, 0)

Cells(nRow, nCol).AddComment ("Priority is High. Typically this indicates that the status of this item should be tracked.")

End Select

Next nRow

End Sub

Note: The Col() function is a custom function that I wrote that simply looks up the column number for a given column name in an Excel Table. I still find it strange that a built-in function for this purpose is not provided.

The above code is pretty simple; it expresses the intent with no need for any “plumbing” code.

I then decided that I wanted to make this into a fully-fledged system that enables Excel to connect directly to a SharePoint Forms Library, get a list of submitted status reports, do a custom import of the form XML data, and then process, analyze and format the generated Excel Workbook. Since I also still routinely fall for the “Newer, Sexier Technology MUST Be Better” trap, I decided I would write the whole thing in .Net 4.0. I used the following for the add-in:

- C# for the main add-in code and worksheet processor

- C# and WPF for the add-in controls library

- F# and the SharePoint Foundation Client Object Model for the SharePoint Client library

- F# for the InfoPath XML parser library

- IronPython for a test harness

I started by porting all of the Excel formatting code from VBA to C#. It was dead easy; I simply cut and paste the VBA into Visual Studio as C# comments and then transformed the code in place. As I was doing the port a little voice kept saying “It cannot be this easy; these are really COM APIs after all, and where there is COM there is trouble.” I chose to placate that little voice in the name of expediency by promising that I would come back later and harden and optimize the code, which would include looking into any COM-related issues.

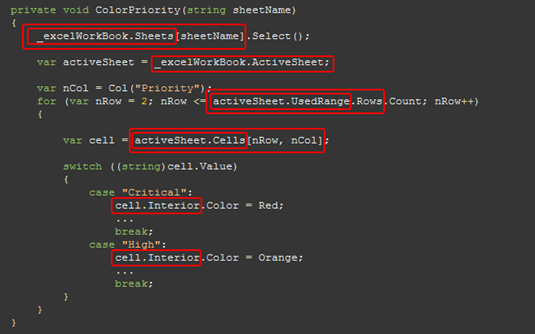

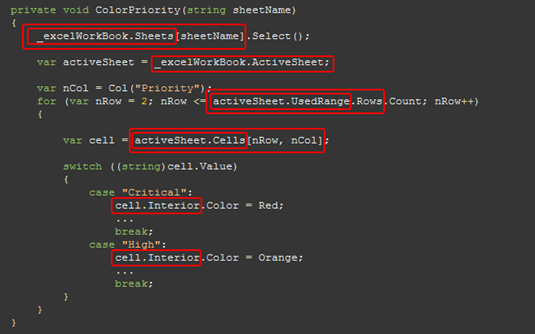

So the C# for the function above VBA become:

private void ColorPriority(string sheetName)

{

//Critical: Red

//High: Orange

_excelWorkBook.Sheets[sheetName].Select();

var activeSheet = _excelWorkBook.ActiveSheet;

var nCol = Col("Priority");

for(var nRow = 2; nRow <= activeSheet.UsedRange.Rows.Count; nRow++)

{

var cell = activeSheet.Cells[nRow, nCol];

switch((string)cell.Value)

{

case "Critical":

cell.Interior.Color = Red;

AddFormattedComment(cell, "Priority is Critical. Typically this indicates a status item that needs follow up.");

break;

case "High":

cell.Interior.Color = Orange;

AddFormattedComment(cell, "Priority is High. Typically this indicates that the status of this item should be tracked.");

break;

}

}

}

Other than the absence of some syntactic sugar provided by VBA, e.g. automatically adding obvious appropriately-named variables to the current scope, the C# code above looks pretty similar to the VBA.

After porting all of the VBA code and developing the rest of the add-in it came time to keep my promise to the little voice by hardening and optimizing the code. I added Asserts that I had missed (Code Contracts would probably have been overkill), error and exception handling, found a number of opportunities for improving the performance by doing operations concurrently, e.g file retrieval and XML parsing, and a number of other performance related optimizations. I then moved onto to looking for COM Interop related issues.

Note: In a effort to improve the overall performance of the add-in I did find a best practice gem. In my original code I was adding individual cells and rows to excel. I found some guidance suggesting that I should build up [multidimensional] arrays that represent the cells and then set the value of an entire range to the array. It significantly improved the overall performance of the add-in. As an example it improved the performance of the InfoPath forms import by a whopping 600%!

Before I go into any details on the solution let’s look at where COM-related issues might show up in the above code. Each of the red rectangles in the following diagram represents a Runtime Callable Wrapper (RCW) which is a .Net object that is a proxy for an underlying COM object. The lifecycle of the underlying COM object is linked to the lifecycle of the RCW. Note that the RCWs in the body of the for loop will be allocated through every execution of the loop. It is also very important to be aware that by simply using the ‘.’ operator one is inadvertently causing additional COM objects to be created; COM objects whose lifetimes are not under the developer’s explicit control by default.

Given that I live in the Internet era of software development the first thing that I did was to do a search online for common COM-related issues in VSTO add-ins. I found a few, but the most common one was the apparent need for deterministic clean-up of COM objects that are instantiated by the managed Office Add-in APIs.

The .Net COM Interop implementation is designed in such a way that it will ultimately take care of releasing the underlying COM object associated with a Runtime Callable Wrapper (RCW), when the RCW is no longer “reachable”, i.e. it has gone out of scope or has been assigned null (in C#). I say “ultimately” because the COM object is released during a lazy process called “finalization”, which may require multiple GCs before it occurs for a given object. Finalization happens on a separate thread and there are no guarantees in what order objects will be finalized. When an RCW is finalized a call to System.Runtime.InteropServices.Marshal.ReleaseComObject is made on the RCW which calls Release on the underlying COM object, which decrements its reference count and probably causes the resources associated with it to be freed. If a developer wants to explicitly release the COM object associated with an RCW they have to explicitly call ReleaseComObject. They also have to have a reference to the object they want to call it on of course. In the code above there are multiple RCWs for which there are no explicit references, i.e. local variables, on which ReleaseComObject can be called. One might think that one could just call it on the previously used object, e.g. "Marshal.ReleaseComObject(activeSheet.UsedRange.Rows), but there is no guarantee that a new RCW won’t be created just so that one can call ReleaseComObject on it. In the above example, if I call ReleaseComObject on only those RCWs that I have assigned to local variables, some COM objects will not be cleaned up until their RCW are finalized.

So, if the .NET Common Language Runtime (CLR) takes care of this for me then why on earth would I ever want to do this myself? Other than simply having more control over the lifetime of the unmanaged resources being used by your application, there are also potential bugs that can be introduced if COM Objects are not deterministically cleaned up. One example that I have seen is that Excel will not quit until all COM objects have been cleaned up, which can result in Excel hanging if it is programmatically shut down but the RCWs are not finalized in a specific order. The common wisdom seems to be that one should always call ReleaseComObject on all RCWs that do not “escape” the scope in which they are declared, in the opposite order in which they were instantiated(despite MSDN suggesting that one should only call ReleaseComObject “if it is absolutely required”). This is typically done in a finally block and requires that local variables are explicitly declared for all RCWs.

So following this guidance I would have to re-write the code above as follows:

private void ColorPriority(string sheetName)

{

Sheets sheets = null;

Worksheet sheet = null;

Worksheet activeSheet = null;

Range usedRange = null;

Range rows = null;

try

{

sheets = _excelWorkBook.Sheets;

sheet = sheets[sheetName];

sheet.Select();

activeSheet = _excelWorkBook.ActiveSheet;

var nCol = Col("Priority");

usedRange = activeSheet.UsedRange;

rows = usedRange.Rows;

for (var nRow = 2; nRow <= rows.Count; nRow++)

{

Range cells = null;

Range cell = null;

Interior interior = null;

try

{

cells = activeSheet.Cells;

cell = cells[nRow, nCol];

interior = cell.Interior;

switch ((string)cell.Value)

{

case "Critical":

interior.Color = Red;

//...

break;

case "High":

interior.Color = Orange;

//...

break;

}

}

finally

{

if(interior != null) Marshal.ReleaseComObject(interior);

if(cell != null) Marshal.ReleaseComObject(cell);

if(cells != null) Marshal.ReleaseComObject(cells);

}

}

}

finally

{

if(rows != null) Marshal.ReleaseComObject(rows);

if(usedRange != null) Marshal.ReleaseComObject(usedRange);

if(activeSheet != null) Marshal.ReleaseComObject(activeSheet);

if(sheet != null) Marshal.ReleaseComObject(sheet);

if(sheets != null) Marshal.ReleaseComObject(sheets);

}

}

Wow, that is far more ugly and verbose code than the original VBA! You may also note that this code introduces a potential bug; is the COM object referenced by activeSheet and sheet actually the same COM object? If they are a System.Runtime.InteropServices.InvalidComObjectException will be potentially thrown when I try to release it a second time. What would be ideal is if I could still deterministically manage the lifetime of the COM objects while maintaining the simplicity of the original VBA code.

The solution I came up with was to create a COM Object Manager class that takes care of all this for me. My solution makes use of the Dispose Pattern, the “using” statement, and C# operator overloading. Using my ComObjectManager my code looks like the following:

private void ColorPriority(string sheetName)

{

//Critical: Red

//High: Orange

using(var cm = new ExcelComObjectManager())

{

(cm < (cm < _excelWorkBook.Sheets)[sheetName]).Select();

var activeSheet = cm < _excelWorkBook.ActiveSheet;

var nCol = ColumnIndex("Priority");

for(var nRow = 2; nRow <= (cm < (cm < activeSheet.UsedRange).Rows).Count; nRow++)

{

var cell = cm < (cm < activeSheet.Cells)[nRow, nCol];

switch((string)cell.Value)

{

case "Critical":

(cm < cell.Interior).Color = Red;

AddFormattedComment(cell, "Priority is Critical. Typically this indicates a status item that needs follow up.");

break;

case "High":

(cm < cell.Interior).Color = Orange;

AddFormattedComment(cell, "Priority is High. Typically this indicates that the status of this item should be tracked.");

break;

}

}

}

}

Yes, this does introduce some new syntax which requires the use of the ‘<’ operator and parentheses, but the code mostly maintains its original simplicity (in my opinion anyway).

Note: The above example demonstrates a potential issue; the example only uses one ComObjectManager for the entire function, so through every iteration of the loop objects are being added to the manager, which effectively “roots” them outside of their original scope. These objects may actually have been finalized earlier if they had not been added to the manager. The solution would be to instantiate a ComObjectManager inside the body of the loop. This is not a rule of thumb though, since there is a significant cost associated with creating the manager and then disposing it. This is something that needs to be tuned depending on the scenario.

The following is a simplified version of the ComObjectManager class. Note: ExcelComObjectManager inherits from ComObjectManager and only adds Excel-specific debugging capabilities.

public class ComObjectManager : IDisposable

{

private Stack<dynamic> _objects;

public ComObjectManager()

{

_objects = new Stack<dynamic>();

}

private bool _disposed = false;

~ComObjectManager()

{

DoDispose();

}

public void Dispose()

{

DoDispose();

GC.SuppressFinalize(this);

}

protected virtual void DoDispose()

{

if(!this._disposed)

{

while(_objects.Count > 0)

{

var obj = _objects.Pop();

if(obj == null) continue;

Marshal.ReleaseComObject(obj);

}

this._disposed = true;

}

}

public static dynamic operator <(ComObjectManager manager, dynamic comObject)

{

if(comObject == null) return null;

if(manager._objects.Contains(comObject))

{

return comObject;

}

manager._objects.Push(comObject);

return comObject;

}

public static dynamic operator >(ComObjectManager manager, dynamic comObject)

{

throw new NotImplementedException();

}

}

The use of the ComObjectManager as implemented above has significant performance penalties. As a performance optimization in my full implementation I have also added code that does the clean up asynchronously using the new System.Thread.Task API. It does improve the performance of the clean-up but the overhead of using this mechanism is still high. One can further improve the robustness of the code by testing to see that the object that is being added is indeed a COM object, since it may happen that a non-COM object is inadvertently added. This however requires a call to Marshal.IsComObject each time an object is added, which is super-expensive and is not worth the performance hit in my opinion.

So why the title of this post? After doing all of this work I came to the conclusion that there is no real utility in using this mechanism in my add-in. The typical use case is that I open Excel, invoke the add-in’s main function, save the resulting worksheet, and then close Excel. How long it takes to import and format the data is far more important than how long it might take to clean-up, and ultimately is irrelevant given that I close Excel when I am done. There may be cases where I would need to use it, e.g. if Excel remained open and an add-in was running continuously on very large worksheets, or in other cases where COM Interop is being used heavily. In this case however this was ultimately an exercise in over-engineering.

It was fun though!