I probably should be writing a post about Microsoft’s SOA and BPM platforms, but I need a breather from that particular topic, so instead I am going to write about my recent frustrations with Processing.js. I was hoping to be able to create some flashy new sketches, but unfortunately my recent experiments have uncovered a critical bug in Processing.js that will only be fixed in the 1.5 release.

My 3-year-old daughter can't stop talking about snow, so I decided to create a little snow generator for her and post it on this blog. I also wanted to experiment with Processing.js' ability to load SVG files for use in a sketch. My idea was simple: create an SVG file containing a number of shapes that can be randomly combined into snowflakes, then generate a collection of those random snowflakes and animate them. Not an ambitious project in the least.
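The random combination can be sketched in plain Java (Processing is a Java dialect, but its drawing API is not available outside the PDE). The hypothetical `FlakeRecipe` class below only decides which SVG element ids go into one flake. It follows the same decisions as `createFlake()` in the sketch further down and uses the same 75% biased coin as its `heads()` function. The randomness is passed in as a `DoubleSupplier` so the choices can be tested deterministically.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.DoubleSupplier;

// Hypothetical helper: picks the SVG building blocks for one flake,
// mirroring the coin flips in the sketch's createFlake().
class FlakeRecipe {
    static final double PROB = 0.75; // same bias as _prob in the sketch

    // rng must return values in [0, 1), like Processing's random(0, 1)
    static boolean heads(DoubleSupplier rng) {
        return rng.getAsDouble() < PROB;
    }

    static List<String> compose(DoubleSupplier rng) {
        List<String> parts = new ArrayList<>();
        parts.add("spoke"); // the six spokes are always drawn
        if (heads(rng)) {
            // optional center: hexagon or circle
            parts.add(heads(rng) ? "centerHex" : "centerCircle");
        }
        if (heads(rng)) parts.add("star");
        if (heads(rng)) parts.add("endCircle");
        // Like arms[(int)random(0, 2)] in the sketch: only long or medium arms
        String[] arms = {"longArms", "mediumArms"};
        for (int radius : new int[] {100, 130, 160}) {
            if (heads(rng)) {
                parts.add(arms[(int) (rng.getAsDouble() * arms.length)] + "@" + radius);
            }
        }
        return parts;
    }
}
```

Passing `() -> 0.0` makes every flip come up heads (the fullest flake), while `() -> 0.9` yields a bare six-spoke flake.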

My previous experiments with Processing were done with the stable 1.5.1 release of the PDE. For this experiment I tried the latest alpha of the Processing 2.0 PDE, primarily because it has a JavaScript mode that exports a web page which loads Processing.js and your sketch (and detects the necessary browser capabilities too!). It does not seem to offer an option to embed the sketch script directly in the HTML, so the sketch is always referenced as an external .pde file. It took me a couple of hours to create the SVG file in Inkscape and a sketch in the PDE that did exactly what I wanted. While prototyping, I worked in Standard mode (which generates a Java applet), since it offers the best development-time performance.

After completing the sketch, I switched to JavaScript mode, and the sketch did little more than draw the background gradient.

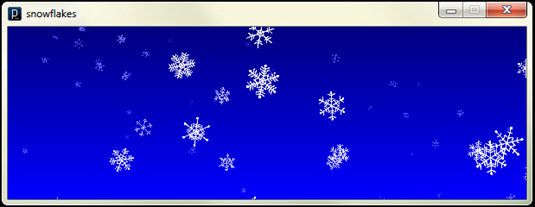

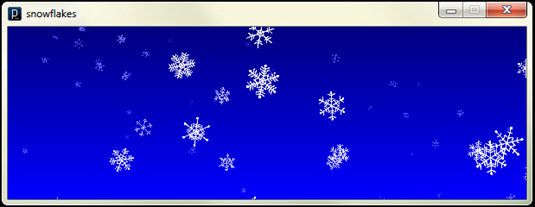

The original sketch looks like this when running:

Processing provides a loadShape method that takes the path or URL to an SVG file, parses the SVG, and generates Processing-native PShape objects. There is currently no way to load SVG elements that are embedded directly in the HTML. Hopefully this will come in a future version of Processing.js. Processing also provides a getChild method to get shapes nested within the root PShape. PShapes can be drawn directly to the screen or drawn off-screen to a PGraphics object which can then be used at some later time to draw to the screen by calling the image method.
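Processing's own classes can't run outside the PDE, so here is a rough analogue of the off-screen pattern using Java2D. A shape is rendered once into a transparent `BufferedImage` (standing in for a `PGraphics`), and that buffer is then stamped onto the "screen" as often as needed (standing in for `image()`). The class and method names are hypothetical, and this is a sketch of the idea, not how Processing implements `PGraphics`.

```java
import java.awt.BasicStroke;
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.geom.Line2D;
import java.awt.image.BufferedImage;

// Java2D analogue of "draw a PShape into a PGraphics once, then image() it many times".
class OffscreenSprite {
    // Off-screen pass: render one "spoke" into a transparent ARGB buffer.
    static BufferedImage renderSpoke(int size) {
        BufferedImage sprite = new BufferedImage(size, size, BufferedImage.TYPE_INT_ARGB);
        Graphics2D g = sprite.createGraphics();
        g.setRenderingHint(RenderingHints.KEY_ANTIALIASING, RenderingHints.VALUE_ANTIALIAS_ON);
        g.setColor(Color.WHITE);
        g.setStroke(new BasicStroke(4f, BasicStroke.CAP_ROUND, BasicStroke.JOIN_ROUND));
        int c = size / 2;
        g.draw(new Line2D.Float(c, c, c, size - 4)); // from the center straight down
        g.dispose();
        return sprite;
    }

    // On-screen pass: fill the background, then reuse the cached sprite at several positions.
    static BufferedImage drawScene(BufferedImage sprite, int width, int height, int step) {
        BufferedImage screen = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = screen.createGraphics();
        g.setColor(new Color(0x000080)); // navy, like the sketch's bkground
        g.fillRect(0, 0, width, height);
        for (int x = 0; x < width; x += step) {
            g.drawImage(sprite, x, height - sprite.getHeight(), null);
        }
        g.dispose();
        return screen;
    }
}
```

The expensive vector rendering happens once per sprite; each later draw is just an image copy, which is the same trade-off the snowflake sketch relies on.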

To generate my snowflakes I created an array of PGraphics objects (each with a little wrapper) and then drew random snowflakes to each. I also added some noise and toy physics to make the whole thing a little more realistic. It looked great in PDE.
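The toy physics boils down to a per-frame update. Below is a plain-Java port of the update in the sketch's `draw()` loop; the `SnowPhysics` and `Flake` names are hypothetical, and `Random.nextFloat()` stands in for Processing's `random()`. Both gravity and wind are multiplied by the flake's scale, so larger flakes fall and drift faster than small ones.

```java
import java.util.Random;

// Plain-Java port of the per-frame update in the sketch's draw() loop.
class SnowPhysics {
    static final class Flake {
        float posX, posY, scale, rotation;

        Flake(float posX, float posY, float scale) {
            this.posX = posX;
            this.posY = posY;
            this.scale = scale;
        }
    }

    // Advance one frame; gravity and wind both scale with the flake's size.
    static void step(Flake f, float width, float height, Random rng) {
        float gravity = f.scale * (10 + 5 * rng.nextFloat());   // like 10 + random(0, 5)
        float wind = f.scale * (5 + (4 * rng.nextFloat() - 2)); // like 5 + random(-2, 2)
        f.posY += gravity;
        f.posX += wind;
        f.rotation += 0.01f;
        // Flakes that leave the bottom or right edge re-enter just off-screen.
        if (f.posY > height + 20) f.posY = -20;
        if (f.posX > width + 20) f.posX = -20;
    }
}
```

Because the wind term is always positive, flakes only ever drift right, which is why only the bottom and right edges need wrapping.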

Note: I was initially sorting the array from smallest to largest and drawing the flakes in that order, but after comparing the results I could not see a difference, so I simply omitted the sort. I had to write my own sort function because the sort implementation provided in Processing will only sort arrays of int, float and String.
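A hand-rolled sort over the flake wrappers does not need to be elaborate. The insertion sort below is a hypothetical stand-in (not the function I actually wrote), in plain Java using only loops and array indexing, that orders flakes from smallest to largest scale so the smallest would be drawn first.

```java
// Hypothetical insertion sort ordering flakes by ascending scale.
class FlakeSort {
    static final class Flake {
        final float scale;

        Flake(float scale) {
            this.scale = scale;
        }
    }

    static void sortByScale(Flake[] flakes) {
        for (int i = 1; i < flakes.length; i++) {
            Flake key = flakes[i];
            int j = i - 1;
            // Shift larger flakes one slot right until key's position is found.
            while (j >= 0 && flakes[j].scale > key.scale) {
                flakes[j + 1] = flakes[j];
                j--;
            }
            flakes[j + 1] = key;
        }
    }
}
```

Insertion sort is quadratic in the worst case, but for a few dozen flakes sorted once at startup that hardly matters.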

There was only one small problem: off-screen drawing of PShapes is broken in the current build of Processing.js. I have filed a bug, and it looks like it will be fixed in the 1.5 release of Processing.js. So, obviously, this post does not include the running sketch.

Another note: I tried using the tint method to adjust the apparent brightness of each snowflake based on its scale every time it was drawn, rather than explicitly setting the stroke color. This KILLED performance, even in Standard mode on my quad-core, 8 GB laptop with hardware-accelerated graphics. Another bug, perhaps?
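For reference, the explicit stroke approach computes the color once per flake, when the flake is rendered off-screen: `br = 4000 * _scale` followed by `stroke(br, br, 255)`. With the scale ranges in the `snowFlake` constructor (0.01 to 0.03 for small flakes, 0.04 to 0.1 for large ones), small flakes stay blue while large flakes approach white. The hypothetical plain-Java helper below reproduces that mapping. It assumes channel values are limited to 0..255, as in Processing's default RGB color mode, and rounds for simplicity.

```java
// Sketch of the scale-to-stroke mapping: stroke(br, br, 255) with br = 4000 * scale.
// Assumption: channels are clamped to 0..255 (default RGB color mode); rounding is a simplification.
class FlakeColor {
    static int strokeRgb(float scale) {
        float br = 4000f * scale;
        int channel = Math.round(Math.max(0f, Math.min(255f, br))); // clamp to 0..255
        return (channel << 16) | (channel << 8) | 255;              // packed 0xRRGGBB, blue fixed at 255
    }
}
```

So a scale of 0.01 gives a saturated blue (40, 40, 255), while anything at or above roughly 0.064 saturates to white.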

Rather than find a work-around in Processing.js, I will probably try to port this sketch to one of the other JavaScript graphics APIs, like Raphaël for example. And of course I will post the running result and code in some future post on this blog.

Since I can't show the final result, here is my Processing code for the sketch (usual caveats and disclaimers apply):

```processing
color bkground = #000080;   // gradient top: navy
color bkground2 = #0000FF;  // gradient bottom: blue

snowFlakeFactory factory;
snowFlake[] flakes;

void setup()
{
  size(600, 200);
  smooth();
  frameRate(60);
  factory = new snowFlakeFactory("snowflakes.svg");
  flakes = factory.createFlakes(60);
}

// Loads the SVG template once and renders random snowflakes into off-screen buffers.
class snowFlakeFactory
{
  color _background;
  PShape _template,
         _spoke,
         _centerHex,
         _centerCircle,
         _star,
         _longArms,
         _mediumArms,
         _shortArms,
         _endCircle;

  snowFlakeFactory(String templateFileName)
  {
    _template = loadShape(templateFileName);
    // Ignore the styles in the SVG so the stroke settings of the PGraphics apply.
    _template.disableStyle();
    // Look up the building blocks by their SVG element ids.
    _spoke = _template.getChild("spoke");
    _centerHex = _template.getChild("centerHex");
    _centerCircle = _template.getChild("centerCircle");
    _star = _template.getChild("star");
    _longArms = _template.getChild("longArms");
    _mediumArms = _template.getChild("mediumArms");
    _shortArms = _template.getChild("shortArms");
    _endCircle = _template.getChild("endCircle");
  }

  snowFlake createFlake()
  {
    snowFlake flake = new snowFlake();
    // 450x450 off-screen buffer; each flake is centered at (225, 225).
    PGraphics graph = createGraphics(450, 450, P2D);
    graph.beginDraw();
    graph.background(0, 0);  // transparent background
    graph.smooth();
    // Larger flakes get a brighter, whiter stroke.
    float br = 4000 * flake._scale;
    graph.stroke(br, br, 255);
    graph.noFill();
    graph.strokeWeight(13);
    graph.shapeMode(CORNERS);

    // The six spokes are always drawn.
    radialDraw(graph, _spoke, 225, 225, 0);

    // Optional center: a hexagon or a circle.
    if (heads())
    {
      if (heads())
      {
        graph.shape(_centerHex, 225, 225);
      }
      else
      {
        graph.shape(_centerCircle, 225, 225);
      }
    }

    if (heads()) graph.shape(_star, 225, 225);
    if (heads()) radialDraw(graph, _endCircle, 225, 225, 190);

    PShape[] arms = {
      _longArms, _mediumArms, _longArms
    };

    // (int)random(0, 2) is always 0 or 1, so only the long and medium arms are used.
    if (heads()) radialDraw(graph, arms[(int)random(0, 2)], 225, 225, 100);
    if (heads()) radialDraw(graph, arms[(int)random(0, 2)], 225, 225, 130);
    if (heads()) radialDraw(graph, arms[(int)random(0, 2)], 225, 225, 160);

    graph.endDraw();
    flake._image = graph;
    return flake;
  }

  snowFlake[] createFlakes(int flakeCount)
  {
    snowFlake[] flakes = new snowFlake[flakeCount];
    for (int i = 0; i < flakeCount; i++)
    {
      flakes[i] = createFlake();
    }
    return flakes;
  }

  // Draws six copies of feature at 60-degree intervals on a circle of radius rad,
  // rotating the shape by PI/3 after each copy. The sixth rotation completes a
  // full turn, restoring the shape's original orientation.
  void radialDraw(PGraphics graph, PShape feature, float originX, float originY, float rad)
  {
    float xOffset = rad * cos(PI/6);
    float yOffset = rad * sin(PI/6);
    graph.shape(feature, originX, originY + rad);
    feature.rotate(PI/3);
    graph.shape(feature, originX - xOffset, originY + yOffset);
    feature.rotate(PI/3);
    graph.shape(feature, originX - xOffset, originY - yOffset);
    feature.rotate(PI/3);
    graph.shape(feature, originX, originY - rad);
    feature.rotate(PI/3);
    graph.shape(feature, originX + xOffset, originY - yOffset);
    feature.rotate(PI/3);
    graph.shape(feature, originX + xOffset, originY + yOffset);
    feature.rotate(PI/3);
  }
}

// One snowflake: its position, size, rotation and pre-rendered image.
class snowFlake
{
  float _posX;
  float _posY;
  float _scale;
  float _rotation;
  PGraphics _image;

  snowFlake()
  {
    _posX = random(width);
    _posY = random(height);
    // 75% of flakes are small (0.01-0.03); the rest are larger (0.04-0.1).
    _scale = heads() ? random(0.01, 0.03) : random(0.04, 0.1);
    _rotation = random(0, PI/6);
    _image = null;
  }

  void drawFlake()
  {
    pushMatrix();
    translate(_posX, _posY);
    scale(_scale);
    rotate(_rotation);
    // Offset by half the buffer size so the flake rotates around its center.
    image(_image, -225, -225);
    popMatrix();
  }
}

void draw()
{
  drawBackgroundGradient(bkground, bkground2);

  for (int i = 0; i < flakes.length; i++)
  {
    snowFlake flake = flakes[i];
    // Toy physics: larger flakes fall and drift faster.
    float gravity = flake._scale * (10 + random(0, 5));
    float wind = flake._scale * (5 + random(-2, 2));
    flake._posY += gravity;
    flake._posX += wind;
    flake._rotation += 0.01;
    flake.drawFlake();

    // Wrap flakes that leave the bottom or right edge.
    if (flake._posY > height + 20) flake._posY = -20;
    if (flake._posX > width + 20) flake._posX = -20;
  }
}

// Vertical gradient: one horizontal line per row, interpolating from c1 to c2.
void drawBackgroundGradient(color c1, color c2)
{
  noFill();
  for (int i = 0; i <= height; i++) {
    float inter = map(i, 0, height, 0, 1);
    color c = lerpColor(c1, c2, inter);
    stroke(c);
    line(0, i, width, i);
  }
}

// Biased coin: heads() returns true 75% of the time.
float _prob = 0.75;

boolean heads()
{
  float rand = random(0, 1);
  return (rand < _prob);
}
```
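A quick check of the geometry in `radialDraw()`: the offsets `rad * cos(PI/6)` and `rad * sin(PI/6)` place the six copies on a circle of radius `rad`, 60 degrees apart, and the six `rotate(PI/3)` calls add up to a full turn, so the shape ends in its original orientation. The hypothetical plain-Java helper below recomputes those positions in the same order so the spacing can be verified.

```java
// Recomputes radialDraw()'s six placement points, in the same order as its shape() calls.
class RadialLayout {
    static float[][] positions(float originX, float originY, float rad) {
        float xOffset = rad * (float) Math.cos(Math.PI / 6);
        float yOffset = rad * (float) Math.sin(Math.PI / 6);
        return new float[][] {
            {originX, originY + rad},
            {originX - xOffset, originY + yOffset},
            {originX - xOffset, originY - yOffset},
            {originX, originY - rad},
            {originX + xOffset, originY - yOffset},
            {originX + xOffset, originY + yOffset},
        };
    }
}
```

Every point lies `rad` away from the origin, and neighboring points are also `rad` apart (the chord of a 60-degree arc equals the radius), which is what gives the flakes their hexagonal symmetry.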

And here is the SVG:

```xml
<svg id="snowflakeTemplate" xmlns="http://www.w3.org/2000/svg" height="1000" width="700" version="1.1">
  <g id="mainLayer" stroke="#000" stroke-miterlimit="4" stroke-dasharray="none" fill="none">
    <path id="spoke" stroke-linejoin="round" d="M0-0,0,190" stroke-linecap="round" stroke-width="10"/>
    <path id="centerHex" stroke-linejoin="miter" d="m-36.48-23.229,0,44.146,36.184,20.891,36.775-21.232,0-41.606-35.987-20.777z" stroke-linecap="butt" stroke-width="10"/>
    <path id="star" stroke-linejoin="round" d="M53.858-31.273,49.838-86.48-0.015-62.659l-50.032-24.126-4.3663,55.188-45.979,31.37,45.814,31.287,4.3801,55.416,49.918-23.819,50.097,24.128,4.4815-55.112,46.094-31.294z" stroke-linecap="round" stroke-width="10"/>
    <path id="longArms" stroke-linejoin="round" d="M49.142,11.646,0-11.646-49.142,11.559" stroke-linecap="round" stroke-width="10"/>
    <path id="mediumArms" stroke-linejoin="round" d="M28.782,6.4858,0-6.4858-28.782,6.3986" stroke-linecap="round" stroke-width="10"/>
    <path id="shortArms" stroke-linejoin="round" d="M-12.226,2.7043,0-2.7915,12.226,2.7915" stroke-linecap="round" stroke-width="10"/>
    <circle id="endCircle" cx="0" cy="0" r="10" stroke-width="10"/>
    <circle id="centerCircle" cx="0" cy="0" r="36.5" stroke-width="10"/>
    <rect id="diamond" stroke-linejoin="round" transform="rotate(45)" height="8" width="8" stroke-linecap="round" y="-4" x="-4" stroke-width="10"/>
  </g>
</svg>
```